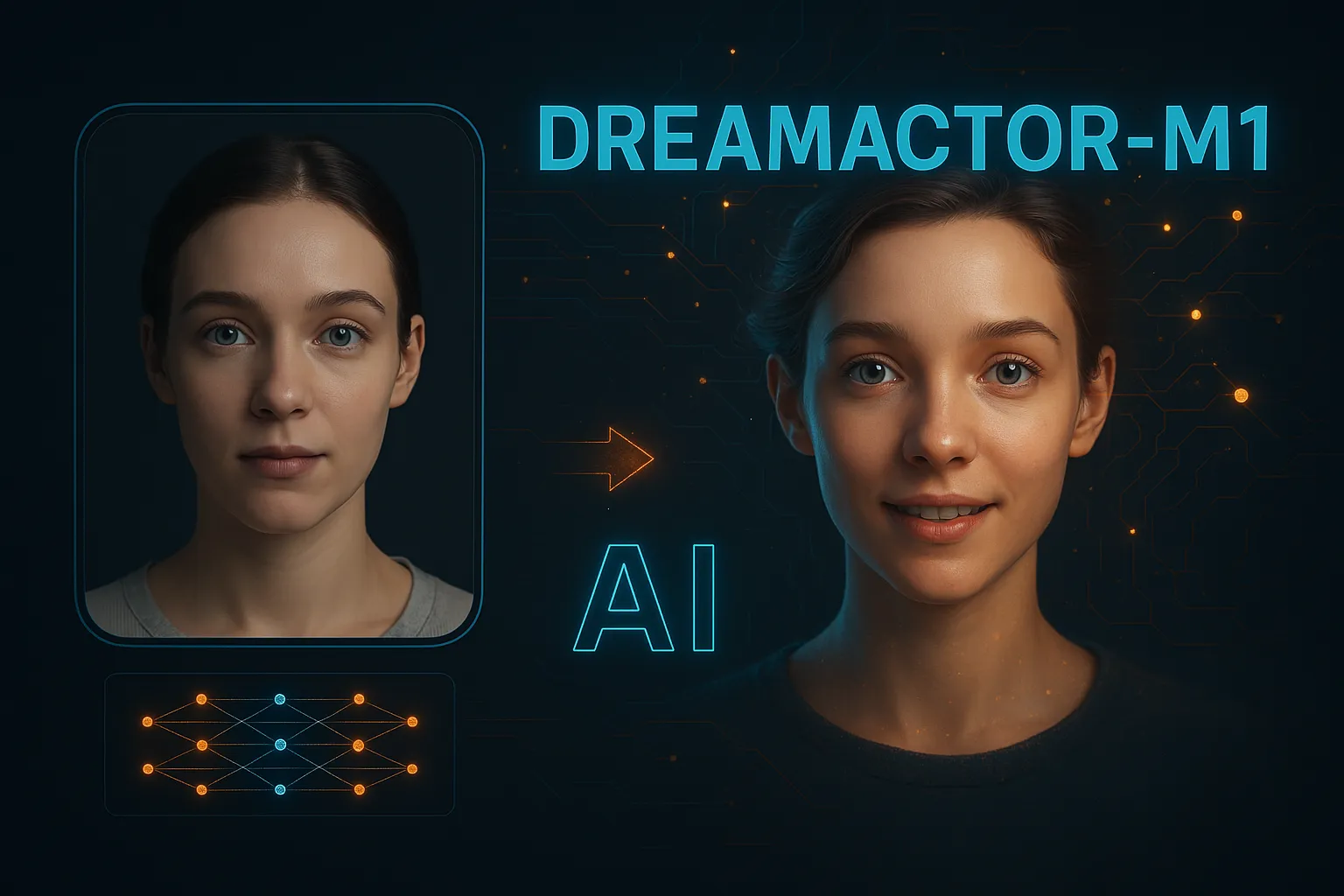

In the rapidly evolving field of artificial intelligence, ByteDance’s DreamActor-M1 has emerged as a notable framework that turns a single static image into a realistic human animation. This article covers DreamActor-M1’s features, architecture, applications, and ethical considerations, and what it may mean for the future of digital content creation.

Introduction to DreamActor-M1

Developed by ByteDance, DreamActor-M1 is an AI model that animates human images with lifelike expressions and movements. Built on a diffusion transformer (DiT) architecture with a hybrid guidance system, it targets three weaknesses of earlier animation models: limited fine-grained control, poor adaptability across image scales, and weak long-term temporal coherence. (Source)

Key Features of DreamActor-M1

1. Hybrid Guidance System

DreamActor-M1 integrates multiple control signals to achieve precise and expressive animations:

- Implicit Facial Representations: Capture subtle facial expressions and micro-movements.

- 3D Head Spheres: Model head orientation and movements in three dimensions.

- 3D Body Skeletons: Provide guidance for full-body poses and movements.

Together, these signals are intended to produce animations that look realistic and preserve the subject’s identity. (arXiv)
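To make the three signal types concrete, here is a minimal Python sketch of a per-frame control record. The class `HybridControl`, its field names and sizes, and the choice to flatten everything into one vector are hypothetical illustrations, not DreamActor-M1’s actual data format; a real system would more likely encode each signal with its own module.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical per-frame container. Field names and sizes are illustrative
# assumptions, not DreamActor-M1's real data format.
@dataclass
class HybridControl:
    face_latent: List[float]                    # implicit facial motion code
    head_center: Tuple[float, float, float]     # 3D head sphere center
    head_radius: float                          # 3D head sphere radius
    head_rotation: Tuple[float, float, float]   # yaw, pitch, roll in radians
    skeleton: List[Tuple[float, float, float]]  # 3D body joint positions

    def to_vector(self) -> List[float]:
        """Flatten face, head, and body signals (in that order) into one list."""
        vec = list(self.face_latent)
        vec.extend(self.head_center)
        vec.append(self.head_radius)
        vec.extend(self.head_rotation)
        for joint in self.skeleton:
            vec.extend(joint)
        return vec


frame = HybridControl(
    face_latent=[0.1, -0.2, 0.05, 0.0],
    head_center=(0.0, 1.6, 0.3),
    head_radius=0.12,
    head_rotation=(0.1, 0.0, -0.05),
    skeleton=[(0.0, 1.0, 0.0), (0.2, 1.4, 0.0), (-0.2, 1.4, 0.0)],
)
print(len(frame.to_vector()))  # 4 + 3 + 1 + 3 + 9 = 20
```

Each signal covers something the others do not: the face code carries expression, the sphere carries head pose, and the skeleton carries body pose, which is why all three travel together for every frame.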

2. Multi-Scale Adaptability

To handle image scales ranging from close-up portraits to full-body shots, the model is trained with a progressive strategy on data of varying resolutions and scales. This is meant to keep performance consistent across different framings and animation scenarios. (arXiv)
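A progressive, multi-scale schedule can be sketched as a list of stages that raise the training resolution and widen the mix of framings over time. The `Stage` class, the step boundaries, resolutions, and framing labels below are illustrative assumptions, not values published for DreamActor-M1.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class Stage:
    until_step: int              # stage is active for steps < until_step
    resolution: Tuple[int, int]  # training resolution (height, width)
    scales: Tuple[str, ...]      # framings sampled during this stage

# Hypothetical schedule: every number and label here is an assumption.
SCHEDULE: List[Stage] = [
    Stage(10_000, (256, 256), ("portrait",)),
    Stage(30_000, (512, 512), ("portrait", "half_body")),
    Stage(60_000, (768, 768), ("portrait", "half_body", "full_body")),
]

def stage_for_step(step: int, schedule: List[Stage] = SCHEDULE) -> Stage:
    """Return the first stage whose boundary lies beyond `step`."""
    for stage in schedule:
        if step < stage.until_step:
            return stage
    return schedule[-1]  # stay in the final stage once the schedule ends

def sample_batch_spec(step: int, rng: random.Random) -> Tuple[Tuple[int, int], str]:
    """Pick the resolution for `step` and a random framing allowed at that stage."""
    stage = stage_for_step(step)
    return stage.resolution, rng.choice(stage.scales)


rng = random.Random(0)
for step in (0, 20_000, 45_000):
    print(step, *sample_batch_spec(step, rng))
```

Starting small and adding wider framings later is one common way to stage such training; the point of the sketch is only that scale coverage grows over time rather than being fixed from the first step.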

3. Long-Term Temporal Coherence

DreamActor-M1 maintains visual consistency over long video sequences by combining motion patterns from sequential frames with complementary visual references, which helps keep regions that are not visible in the source image consistent during complex movements. This is designed to reduce problems such as flickering and texture inconsistencies. (Source)
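One generic way to keep long outputs consistent is to generate the video in segments, passing every segment the same appearance references plus the last few frames already produced. The sketch below assumes that scheme; `generate_long_video`, `generate_segment`, `segment_len`, and `context_len` are hypothetical names, and the source does not say that DreamActor-M1 splits videos this way.

```python
from typing import Callable, List, Sequence

Frame = str  # placeholder; a real system would pass image tensors

def generate_long_video(
    poses: Sequence[str],
    references: Sequence[Frame],
    generate_segment: Callable[[Sequence[str], Sequence[Frame], Sequence[Frame]], List[Frame]],
    segment_len: int = 16,
    context_len: int = 4,
) -> List[Frame]:
    """Generate a long clip segment by segment.

    Every segment receives the same appearance references plus the last
    `context_len` frames already generated, so consecutive segments share
    motion context instead of starting from scratch.
    """
    if segment_len <= 0 or context_len < 0:
        raise ValueError("segment_len must be positive and context_len non-negative")
    video: List[Frame] = []
    for start in range(0, len(poses), segment_len):
        chunk = poses[start:start + segment_len]
        # Guard needed because video[-0:] would return the whole list.
        context = video[-context_len:] if context_len else []
        video.extend(generate_segment(chunk, references, context))
    return video


def fake_segment(chunk, refs, context):
    """Stand-in generator: tag each frame with how many context frames it saw."""
    return [f"{pose}|ctx={len(context)}" for pose in chunk]


frames = generate_long_video([f"p{i}" for i in range(40)], ["ref"], fake_segment)
print(frames[0], frames[16])  # p0|ctx=0 p16|ctx=4
```

The first segment has no history to lean on, so in practice it depends entirely on the references; every later segment also sees the tail of the previous one, which is what smooths the seams between segments.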

Technical Architecture

The architecture of DreamActor-M1 comprises several components that work in unison to produce high-quality animations:

- Motion Guidance Modules: Extract control signals from driving videos, including implicit facial motion, 3D head spheres, and 3D body skeletons.

- ReferenceNet: Generates multi-view pseudo-references to provide complementary appearance guidance, ensuring consistency in unseen regions.

- Diffusion Transformer (DiT): Processes the extracted signals and references to generate temporally coherent and expressive video sequences.

This architecture allows for holistic control over facial expressions and body movements, resulting in animations that are both realistic and expressive. (arXiv)
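The data flow between these components can be sketched with stand-in functions that pass strings instead of tensors. Everything here (`MotionSignals`, `extract_motion`, `pseudo_references`, `dit_generate`, `animate`, and the chosen extra views) is hypothetical and only illustrates how motion signals and appearance references might meet in the generator; it is not ByteDance’s code.

```python
from dataclasses import dataclass
from typing import List, Tuple

# All functions are hypothetical stand-ins that return strings,
# so the data flow runs without any model weights.

@dataclass
class MotionSignals:
    face: List[str]
    head: List[str]
    body: List[str]

def extract_motion(driving_frames: List[str]) -> MotionSignals:
    """Motion guidance: one face, head, and body signal per driving frame."""
    return MotionSignals(
        face=[f"face({f})" for f in driving_frames],
        head=[f"head({f})" for f in driving_frames],
        body=[f"body({f})" for f in driving_frames],
    )

def pseudo_references(image: str, views: Tuple[str, ...] = ("side", "back")) -> List[str]:
    """Appearance guidance: the source image plus extra views for unseen regions."""
    return [image] + [f"{image}@{v}" for v in views]

def dit_generate(signals: MotionSignals, refs: List[str]) -> List[str]:
    """Stand-in for the DiT: one output frame per motion step, conditioned on all refs."""
    return [
        f"frame[{face},{head},{body}|refs={len(refs)}]"
        for face, head, body in zip(signals.face, signals.head, signals.body)
    ]

def animate(image: str, driving_frames: List[str]) -> List[str]:
    """Wire the components together: motion + references -> generated frames."""
    signals = extract_motion(driving_frames)
    refs = pseudo_references(image)
    return dit_generate(signals, refs)


for frame in animate("photo.png", ["d0", "d1"]):
    print(frame)
```

The separation mirrors the description above: motion comes only from the driving video, appearance comes only from the source image and its pseudo-references, and the generator is the single place where the two are combined.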

Applications of DreamActor-M1

DreamActor-M1’s capabilities point to applications in several industries:

- Filmmaking: Directors could animate characters from static images, potentially shortening parts of the production process.

- Social Media: Users could turn portraits into lively animated clips to increase engagement.

- Education and Virtual Reality: The model could simplify the creation of immersive content for learning and interactive experiences.

These applications show the model’s versatility and its potential to change how content is created. (AI Base)

Ethical Considerations

While DreamActor-M1 offers significant advancements, it also raises important ethical questions:

- Consent and Identity Misuse: The ability to animate images without the subject’s consent could lead to unauthorized use and privacy concerns.

- Deepfake Risks: The realism of the animations makes it challenging to distinguish between AI-generated and real footage, potentially facilitating misinformation.

- Need for Transparency: It’s crucial to disclose AI-generated content to maintain trust and prevent deception.

Addressing these issues is essential to ensure responsible use of the technology. (Analytics Vidhya)

Conclusion

DreamActor-M1 represents a significant step forward in AI-driven human image animation, offering fine-grained control and a high degree of realism. Its hybrid guidance, multi-scale adaptability, and long-term temporal coherence position it as a potentially transformative tool for digital content creation. However, its ethical implications must be handled carefully if that potential is to be used responsibly.

Watch DreamActor-M1 in Action

For a visual demonstration of DreamActor-M1’s capabilities, check out this video:

ByteDance DreamActor-M1: Video Generation Model for Movies


Frequently Asked Questions (FAQ)

What is DreamActor-M1?

DreamActor-M1 is an AI model developed by ByteDance that transforms static human images into realistic animated videos using hybrid motion guidance and a diffusion transformer (DiT) architecture.

Is DreamActor-M1 free to use?

At the time of writing, DreamActor-M1 has not been officially released for public use. Researchers can read about its features on the official project page.

Can DreamActor-M1 be used commercially?

Whether it can be used commercially depends on ByteDance’s licensing and terms of service. Always check the most up-to-date usage policies on their official platform or in their publications.

What makes DreamActor-M1 different from other AI video generators?

Unlike many earlier animation models, DreamActor-M1 combines implicit facial motion, 3D head spheres, and 3D body skeletons in a single hybrid guidance system, which gives more accurate and realistic control over both the face and the body.

Where can I explore similar AI tools?

You can find more reviews and tools on platforms like ToolBoost AI, where the latest AI video generation solutions are featured.

Is the output from DreamActor-M1 ethical?

That depends on how it’s used. The model raises concerns about deepfakes and consent, so it’s crucial to apply it responsibly and ethically.